When Tech Design Fails Women: Rethinking Safety in Online Spaces

Published by Foundation for Media Alternatives (FMA) on

Every time a woman logs into a digital platform, she brings with her not just her voice, but her vulnerability to visibility, to exposure, and sometimes, to violence.

Technology has opened doors for connection, learning, and empowerment. But for many women, the same tools that connect us also become tools of harm. From doxxing and stalking to non-consensual image sharing and sexual extortion, violence in digital spaces mirrors and amplifies the inequalities we face offline.

This form of abuse has a name: Technology-Facilitated Gender-Based Violence (TFGBV). And while we often talk about perpetrators or harmful content, one truth remains underexplored: The design of technology itself can enable harm.

Listening to Women’s Digital Trauma

At the Foundation for Media Alternatives (FMA), we have worked with women, LGBTQ+ people, and members of other marginalized communities who have experienced TFGBV firsthand. Their stories reveal the emotional and psychological toll of online abuse – fear, anxiety, sleeplessness, and social withdrawal – forms of trauma that digital systems often fail to acknowledge.

Many female journalists have been bombarded by coordinated online attacks – every post met with misogynistic slurs and threats. One survivor’s private photos were leaked, turning moments of trust into lasting shame. A young queer woman was outed online, losing safety in both her digital and physical communities.

For each of them, the internet became a site of violation, and the trauma was not just emotional – it was architectural.

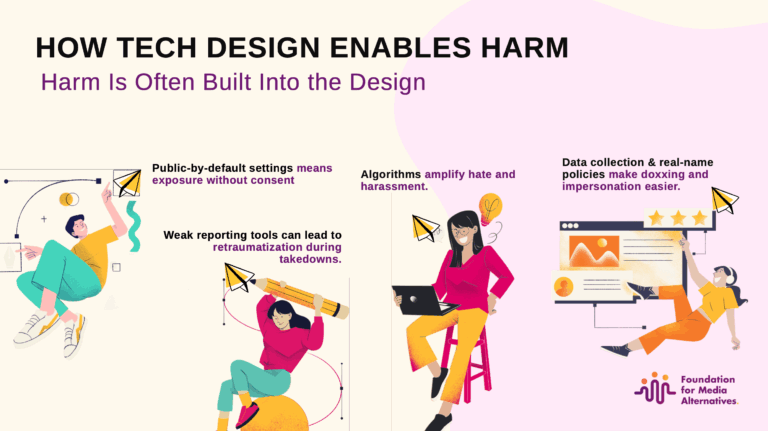

When Tech Design Enables Harm

Many of these harms don’t arise in a vacuum. They thrive in design systems that were not built with safety in mind.

Consider these common features:

Public-by-default settings that expose users’ profiles and posts without consent.

Complicated privacy tools that make protection difficult to navigate.

Reporting systems that are slow, retraumatizing, or simply ignored.

Algorithms that reward engagement, even when that engagement is fueled by hate, outrage, or violence.

These are not “glitches.” They are design choices that reflect whose experiences were centered and whose were not when these platforms were created.

In this sense, online abuse is not only about individual bad actors. It’s also about structural indifference built into technology.
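To make the first of these design choices concrete, here is a minimal sketch of what private-by-default settings could look like in code. It is an illustration under stated assumptions, not any real platform's implementation: the names `Visibility`, `PrivacySettings`, and `can_view_profile` are hypothetical, and the specific defaults are only one possible protective baseline.

```python
from dataclasses import dataclass
from enum import Enum


class Visibility(Enum):
    """Hypothetical audience levels for a user's profile."""
    ONLY_ME = "only_me"
    FOLLOWERS = "followers"
    PUBLIC = "public"


@dataclass
class PrivacySettings:
    # Assumed defaults: every field starts at a protective value,
    # so exposure only happens when the user explicitly opts in.
    profile_visibility: Visibility = Visibility.FOLLOWERS
    show_location: bool = False
    allow_messages_from_strangers: bool = False
    searchable_by_phone_or_email: bool = False


def can_view_profile(settings: PrivacySettings, is_owner: bool, is_follower: bool) -> bool:
    """Return True only if the viewer is allowed to see the profile."""
    if is_owner:
        return True
    if settings.profile_visibility is Visibility.PUBLIC:
        return True
    if settings.profile_visibility is Visibility.FOLLOWERS:
        return is_follower
    # ONLY_ME: nobody except the owner can view the profile.
    return False
```

In this sketch, a newly created account is hidden from strangers until its owner chooses otherwise. Going public becomes a deliberate act by the user rather than something she has to discover and undo.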

Trauma-Informed and Feminist Design

To truly make digital spaces safer, we must embed care into code.

A trauma-informed approach to technology starts by asking:

Could this feature be weaponized against someone?

Do users have real control over their visibility and data?

Does the platform offer empathy and ease in moments of harm?

Who gets heard and who gets silenced by the algorithm?

A feminist approach to design goes even further. It sees technology not as neutral, but as political. It recognizes that safety, consent, and autonomy are not “add-ons,” but essential foundations.

When we design with women and marginalized users at the center, we do not just prevent harm; we reimagine what care looks like online.

Building Digital Spaces That Heal, Not Harm

The good news: change is possible. Developers, policymakers, and advocates are already rethinking digital ecosystems through a lens of justice and empathy.

But this work demands collaboration between tech creators, survivor networks, and feminist organizations who have long been sounding the alarm.

Because the internet we need is one that protects, not punishes, expression.

One that prioritizes healing over hostility, community over clicks, and consent over control.

Call to Action

The fight against online gender-based violence is not only about banning content or catching perpetrators. It is about redesigning the digital world itself to center safety, care, and human dignity.

When we embed these values into technology, we create more than safer platforms; we create a more compassionate internet for everyone.

If you or someone you know is experiencing any form of online harassment, you may reach out to tfgbv.helpline@proton.me for support, resources, and guidance on how to safely navigate these challenges.
